A Must-Read for Anyone Who Handles Terabytes to Petabytes of Data

Break through the toughest data limitations

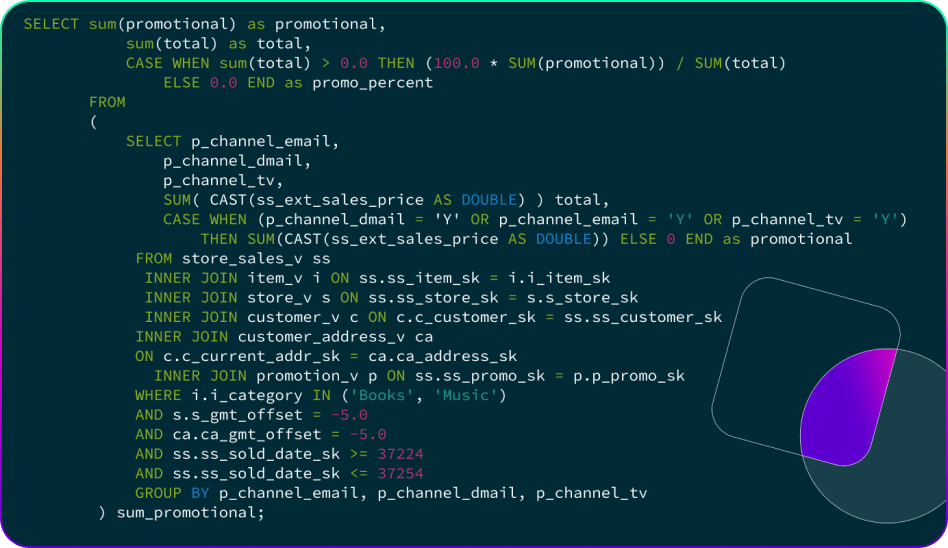

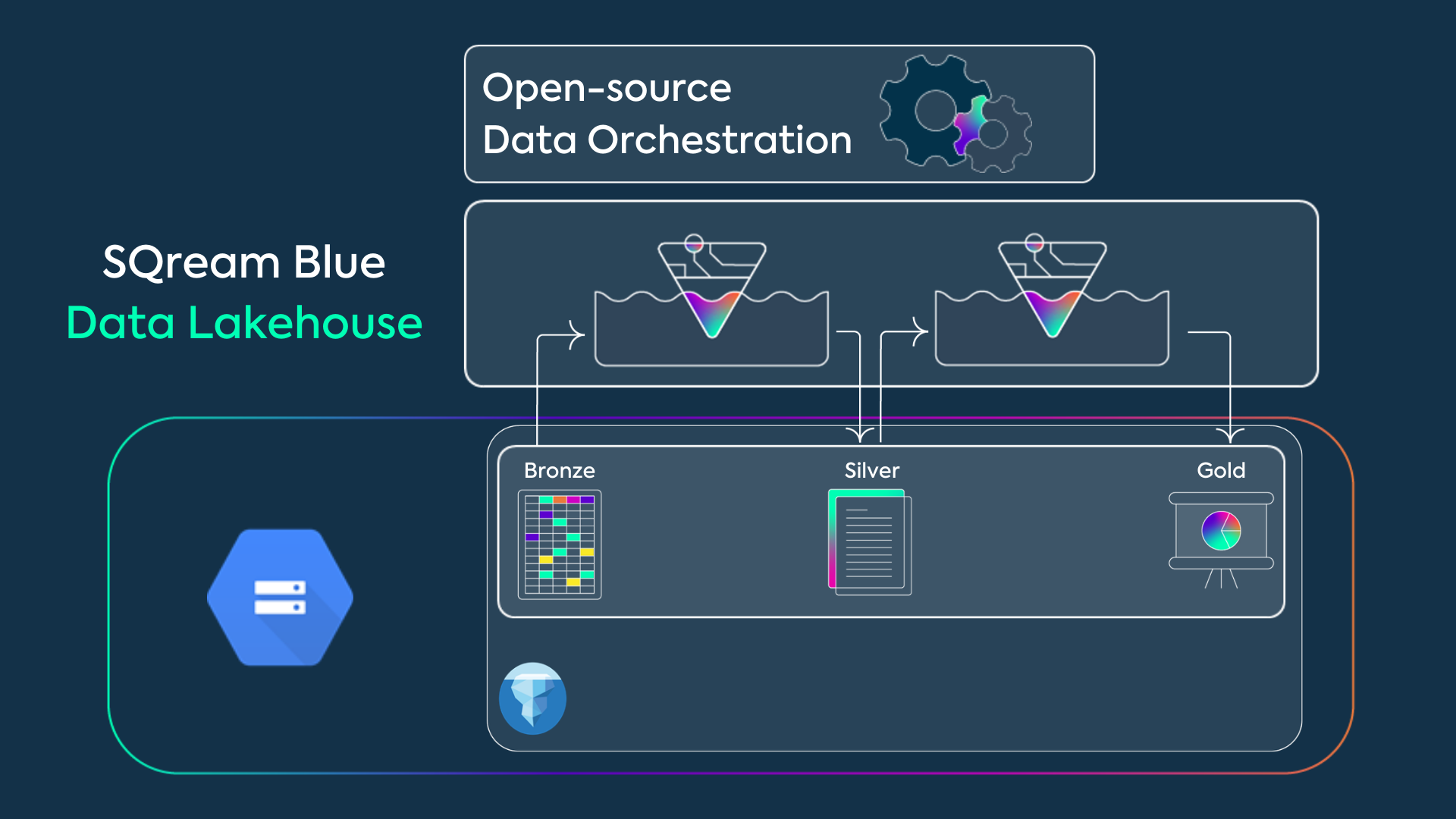

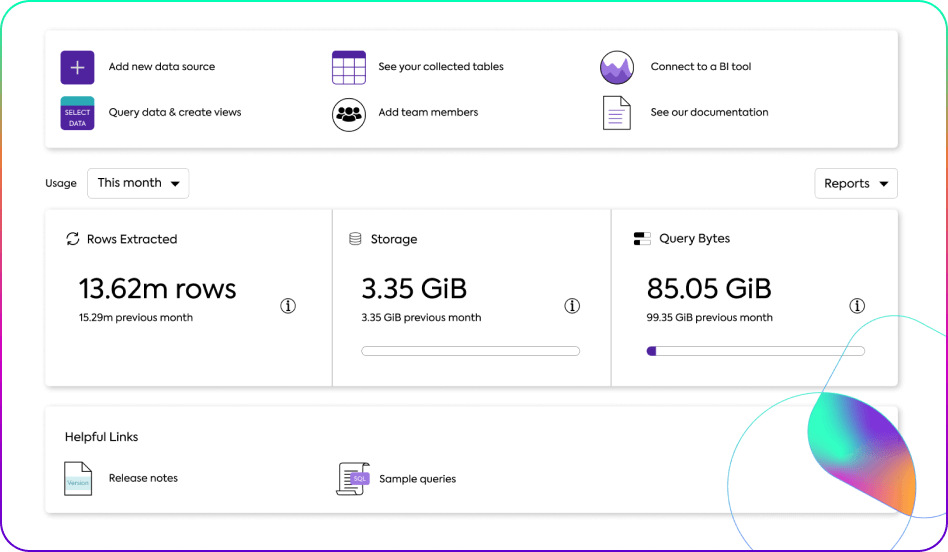

From painful compromises on time and complexity to heavy investments, querying big data has limits that hold your business back. SQream's GPU acceleration empowers businesses to overcome those limits.

Experience the Power of Petabyte-Scale SQL on GPUs

Participate in SQream Innovation Lab

Are you a data leader and innovation enthusiast? Learn how to run large, complex queries faster than ever using the power of GPUs.

Analyze ALL your data at lightning speed and at a fraction of the cost

There Is No Such Thing as Too Much Data

Struggling with overwhelming data volumes, complex queries, and slow processing times? Meet SQream.

Learn more & Book a meeting