Introduction

Imagine a lone cyclist battling against the wind, pushing forward on the road, tackling tasks one at a time with efficiency and determination. This is a fitting analogy for the Central Processing Unit (CPU) in data processing. However, the Graphics Processing Unit (GPU) is more akin to a powerful peloton, a group of riders working in perfect synchronization. With its multitude of cores, the GPU excels at parallelizing tasks, much like the peloton collaboratively shares the workload, enabling it to surge ahead and conquer complex challenges with remarkable speed and efficiency.

If you’re struggling to grasp this analogy, you can always go back to the well-known video by NVIDIA and the Mythbusters.

The Advantages of SQreamDB Architecture

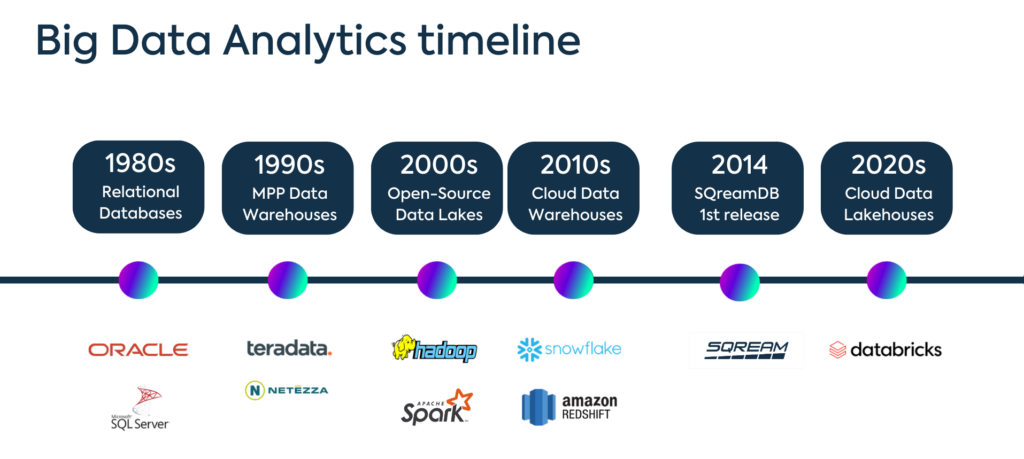

When SQream was founded in 2010, the trend was to solve big data problems with open-source tools from the Apache Hadoop ecosystem. These tools stepped away from SQL in favor of programming languages such as Java and Scala, and “sanctified” the coupling of compute and storage across numerous physical nodes. SQream’s founders, however, saw the need to extend ACID-compliant databases to support big data projects while keeping the simplicity of the well-known SQL interface and analytics.

SQream’s processing technology differs both from the old-school Hadoop ecosystem and from modern cloud data warehouses. We provide everything you could expect from the latter, but with significantly better cost-performance. How do we achieve this? We take a different approach to some of the common bottlenecks of big data analytics.

I/O Bottleneck

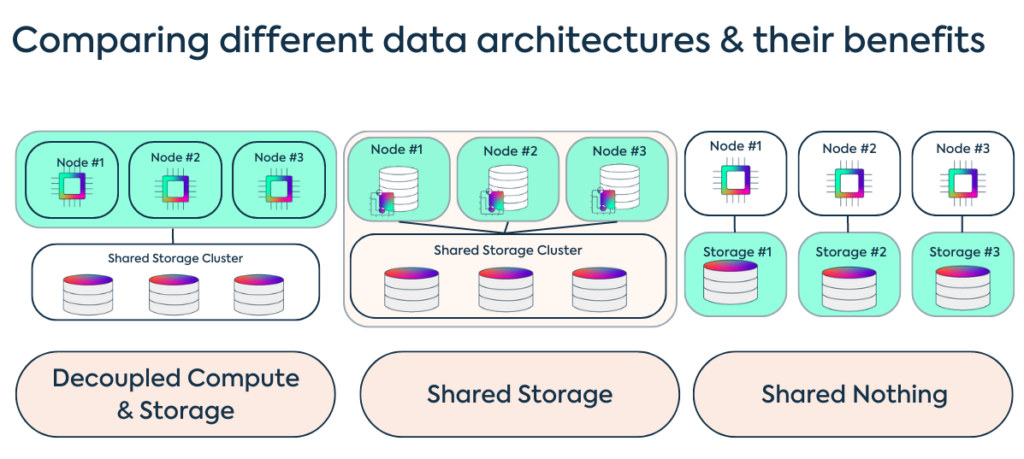

An I/O bottleneck occurs when a system’s throughput is not enough for the number of tasks it is processing. Hadoop-based MPP frameworks solved it with a Shared Nothing architecture, in which each node processes its assigned tasks against its own local storage while communicating with the other nodes during execution. Cloud data warehouses solved it with a hybrid of the Shared Nothing and Shared Storage architectures, in which storage and compute are separate, fully decoupled layers.

SQreamDB, however, is based on a Shared Storage architecture, where all the compute nodes are linked to the same storage but each has its own private memory and compute. This way, scalability and flexibility are maintained through better resource utilization and simpler data management.

Memory Bottleneck

For data to be processed, it must be transferred from storage to RAM, and from there to the CPU. This flow of data between memory layers often involves latency. Hadoop-based systems tried to push the limits of “in-memory” processing with caching, partitioning, and efficient in-memory storage. Cloud data warehouses tried to solve it with columnar logic, which means identifying the data that is actually needed to answer the query at hand, and moving only that part into RAM and the CPU.
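The columnar idea can be shown with a minimal Python sketch. It is an illustration only, not how any particular data warehouse is implemented: the table, the column names, and the helpers `load_columns` and `total_amount_for_region` are all hypothetical. The point is that a query touching two columns never reads the other two.

```python
# Hypothetical table stored column by column rather than row by row.
columns = {
    "order_id": [1, 2, 3, 4],
    "region": ["EU", "US", "EU", "APAC"],
    "amount": [120.0, 80.0, 45.5, 300.0],
    "comment": ["gift", "", "rush", ""],
}


def load_columns(store, needed):
    """Return only the requested columns; the others are never touched."""
    return {name: store[name] for name in needed}


def total_amount_for_region(store, region):
    """Sum `amount` for one region, reading just the two columns involved."""
    loaded = load_columns(store, ["region", "amount"])
    total = sum(
        amount
        for row_region, amount in zip(loaded["region"], loaded["amount"])
        if row_region == region
    )
    # Also report which columns were read, to make the pruning visible.
    return total, sorted(loaded)


total, columns_read = total_amount_for_region(columns, "EU")
```

Here `order_id` and `comment` stay where they are, which is the saving a row-oriented layout cannot offer.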

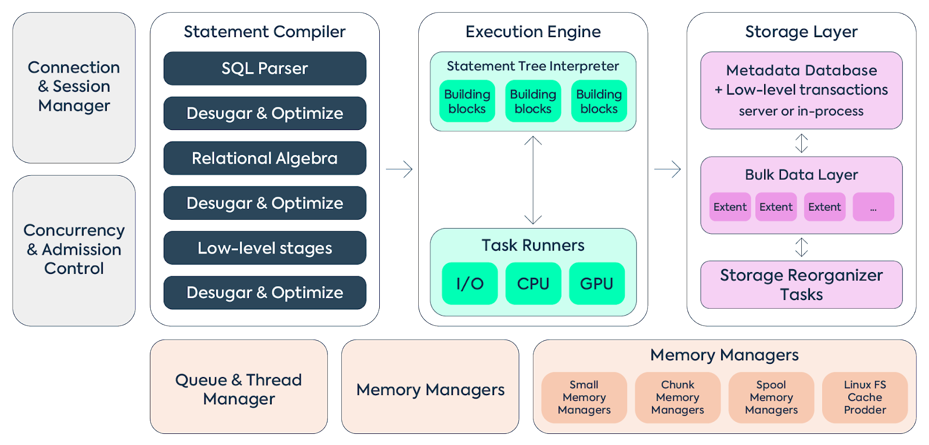

SQreamDB, however, has a patent-protected technology for zero-latency data flow between all three layers: RAM, CPU, and GPU RAM. With many more resources available, SQreamDB passes to the GPU only the most complex tasks that require parallelism.

Scaling Bottleneck

The challenge of handling growing data volumes and workloads is often solved by scaling out the number of nodes, which causes enormous infrastructure spending. Hadoop-based systems distribute data processing between multiple nodes that work together to store and query data. Cloud data warehouses split the data into smaller chunks, with each node processing its portion simultaneously and independently.

SQreamDB’s GPU acceleration technology enables this same parallelism on the chip itself (MPP-on-chip). Every GPU can be divided into workers that work on the same task simultaneously, so each compute unit can be logically split into several without actually installing more nodes.
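The pattern of logically splitting one compute unit into several workers that share a task can be sketched in a few lines of Python. This is an analogy under stated assumptions, not SQreamDB code: `split_into_slices` and `parallel_sum` are hypothetical names, and Python threads stand in for on-chip workers only to show the partitioning, so the sketch will not reproduce the speedup of real GPU parallelism.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_slices(values, workers):
    """Divide values into `workers` contiguous slices of nearly equal size."""
    size, extra = divmod(len(values), workers)
    slices, start = [], 0
    for i in range(workers):
        # The first `extra` slices take one additional element each.
        end = start + size + (1 if i < extra else 0)
        slices.append(values[start:end])
        start = end
    return slices


def parallel_sum(values, workers=4):
    """Have each worker sum its own slice, then combine the partial sums."""
    slices = split_into_slices(values, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum, slices))
    return sum(partials)


total = parallel_sum(list(range(1, 101)), workers=4)
```

The number of workers is a parameter of the call rather than of the hardware, which mirrors the idea of adding logical workers without adding nodes.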

Optimization Bottleneck

Ongoing (usually manual) maintenance of data to achieve better performance is often time-consuming and delays the analytics workflow. Hadoop-based systems, designed from the start around numerous compute and storage nodes, require data to be partitioned and indexed manually. Cloud data warehouses often offer built-in optimizations for partitioning and indexing, but the user still has to define the desired keys and schema. This manual configuration improves query performance, but it does not help every type of query.

SQreamDB, on the other hand, automatically partitions column data into chunks, which are compressed to disk using a wide variety of CPU or GPU compression algorithms. SQreamDB automatically chooses the algorithm that best fits the input data. Moreover, SQreamDB saves metadata and statistics for each chunk (the equivalent of an index), allowing efficient and flexible data skipping with no prior knowledge of the queries that will be run.
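A minimal Python sketch can make chunking, per-chunk compression choice, and data skipping concrete. It is not SQreamDB's implementation: the `Chunk` class, `build_chunks`, `scan_greater_than`, the tiny chunk size, the three standard-library codecs, and the use of min/max as the only statistics are all assumptions made for illustration.

```python
import bz2
import json
import lzma
import zlib
from dataclasses import dataclass

# Hypothetical codec table; a real engine would use its own CPU/GPU codecs.
CODECS = {
    "zlib": (zlib.compress, zlib.decompress),
    "bz2": (bz2.compress, bz2.decompress),
    "lzma": (lzma.compress, lzma.decompress),
}


@dataclass
class Chunk:
    codec: str      # name of the codec chosen for this chunk
    payload: bytes  # compressed column values
    min_value: int  # statistics used for data skipping
    max_value: int


def build_chunks(values, chunk_size=4):
    """Split a column into chunks, compress each, and record min/max."""
    chunks = []
    for start in range(0, len(values), chunk_size):
        part = values[start:start + chunk_size]
        raw = json.dumps(part).encode("utf-8")
        # Try every codec and keep the smallest output for this chunk.
        name, payload = min(
            ((n, compress(raw)) for n, (compress, _) in CODECS.items()),
            key=lambda item: len(item[1]),
        )
        chunks.append(Chunk(name, payload, min(part), max(part)))
    return chunks


def scan_greater_than(chunks, threshold):
    """Return values > threshold, decompressing only chunks that can match."""
    matches, chunks_read = [], 0
    for chunk in chunks:
        if chunk.max_value <= threshold:
            continue  # metadata rules this chunk out; skip it entirely
        chunks_read += 1
        _, decompress = CODECS[chunk.codec]
        part = json.loads(decompress(chunk.payload))
        matches.extend(v for v in part if v > threshold)
    return matches, chunks_read


column = list(range(1, 13))
chunks = build_chunks(column)
result, chunks_read = scan_greater_than(chunks, 8)
```

With twelve values in three chunks, the filter `> 8` decompresses only the last chunk; the first two are ruled out by their recorded maximum alone, and no key had to be declared in advance.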

Summary

While Hadoop and MPP databases tend to be quite demanding in terms of the hardware resources they require, SQreamDB can achieve the same (or even better) performance with significantly fewer resources. This is possible thanks to SQreamDB’s use of general-purpose GPUs (GP-GPU) and its ability to significantly reduce statement execution time by parallelizing intermediate steps.

In contrast to traditional data warehouses and even NoSQL platforms, which tightly couple storage and compute on the same nodes, SQream decouples storage and compute, enabling scale-out of specific components to achieve linear scale. Scaling these traditional systems for additional concurrent users, more complex queries, or additional data storage can be challenging and can lead to performance limitations. SQream’s decoupling approach provides greater flexibility and improved scalability. The solution is practical and built to support the needs of modern enterprises, empowering them with faster data processing and more advanced analytics capabilities.