A quick look at Wikipedia tells you: “Benchmarking is the practice of comparing business processes and performance metrics to industry bests and best practices from other companies. Dimensions typically measured are quality, time and cost.”

Over the past year, SQream began taking the “art” of benchmarking seriously as part of migrating its data analytics platform to the cloud and creating its hybrid offering. At first, our efforts were mostly internal: we were trying to understand whether our product offered any value compared with cloud data analytics giants such as Amazon Redshift, Google BigQuery, Microsoft Azure Synapse or Snowflake.

Later, after gaining more experience and becoming more familiar with the benchmarks of the TPC (Transaction Processing Performance Council), we were proud of our results and shared them in all our customer-facing interactions (and, of course, on our website).

Why Data Analytics Benchmarks Get Us Excited

So, what is it about data analytics benchmarking and comparing results that gets us so excited? Well, benchmarking is the foundation of every business decision-making process that takes place before purchasing a product. This is even more true when the product is a data platform that needs some adaptation to connect to your existing architecture. Most companies won’t replace their entire data stack at once, so benchmarks help them understand why they should even consider talking to us.

Keeping Benchmarking Objective

If there is one thing I’ve learned after swimming in the data analytics benchmarks pool for almost a year, it is that no one is 100% objective. The most recent clash (November 2021) between Snowflake and Databricks over their performance on the TPC-DS 100TB benchmark is a great example of this.

At SQream we do our best to compare “apples to apples,” but let’s be honest – cloud data warehouse vendors make it very hard to benchmark against them objectively. You could say that you have better odds of winning “Guess Who?” than of guessing which CPU model Amazon Redshift uses, how many nodes are in a Snowflake X-Large warehouse, or how much RAM backs Azure Synapse’s DW1500c service level.

Under these circumstances, we still did our best to maintain our integrity while testing SQream against other vendors in our own lab, with the tests run by our own big data engineers. Another method we use when trying to win a specific prospect is to beat benchmark results published by other vendors or by third parties (see, for example, McKnight Consulting Group or SecureKloud).

The Real Litmus Test

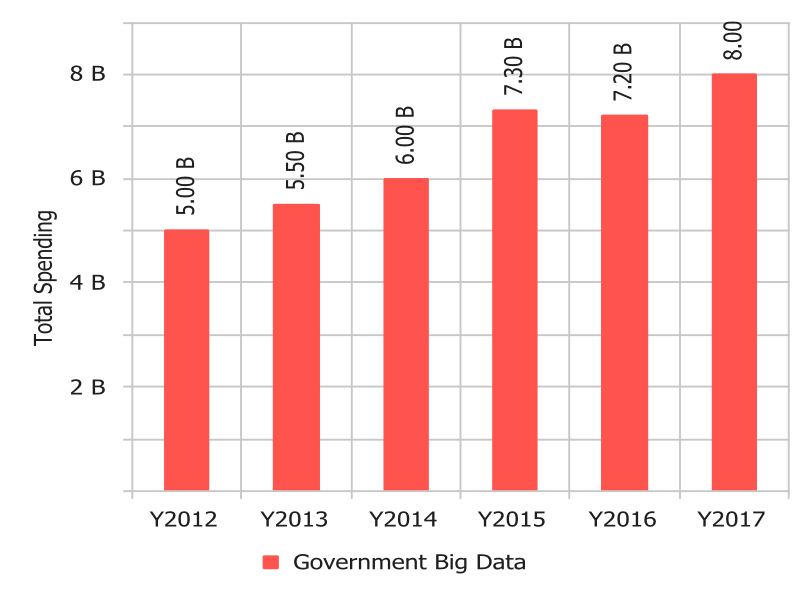

Besides relying on well-established benchmarks such as those of the TPC, we also try to present results that are relevant to each prospect we meet. One good example is the telco network planning use case, which we demonstrated during a POC for a Tier-0 European telco operator. It included 13 queries on a ~11.5TB dataset and modeled how a telco decides where to build new cell towers when planning a new network.

To get our hands dirty, we configured both on-premise and public cloud environments (four environments in total, all of equal cost) to prove that we could beat the telco’s existing solution on cost/performance.

| | On-premise Dell Xeon | On-premise IBM Power9 | Cloud 1 node | Cloud 4 nodes |
|---|---|---|---|---|
| Storage | StorONE TRU storage Dual node HA 10U | StorONE TRU storage Dual node HA 10U | Cloud storage services | Cloud storage services |
| RAM | 2 TB | 1.5 TB | 688 GB | 688 GB |
| GPU | 4x V100 | 4x V100 | 4x V100 | 4x V100 |
| Workers | 16 | 16 | 8 | 16 |

Running the 13 queries produced results that surprised both us and the prospect:

| Current cloud solution | On-premise Dell Xeon | On-premise IBM Power9 | Cloud 1 node | Cloud 4 nodes |
|---|---|---|---|---|
| 2 hours 59 minutes | x1.8 faster | x2.7 faster | x1.26 slower | x1.7 faster |
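To make the table easier to read, the relative factors can be converted back into approximate runtimes. The sketch below rests on two assumptions. First, each factor is relative to the 2 hours 59 minutes of the current cloud solution. Second, that figure is the total for all 13 queries. The variable and function names are illustrative, and the resulting times are derived estimates, not separately measured results.

```python
# Assumption: every factor in the results table is relative to this baseline,
# the reported runtime of the prospect's current cloud solution.
BASELINE_MINUTES = 2 * 60 + 59  # 2 hours 59 minutes = 179 minutes

# Relative factors from the results table. A factor > 1 means "faster than
# the baseline"; the "x1.26 slower" entry is encoded as 1 / 1.26.
speedups = {
    "On-premise Dell Xeon": 1.8,
    "On-premise IBM Power9": 2.7,
    "Cloud 1 node": 1 / 1.26,
    "Cloud 4 nodes": 1.7,
}


def implied_runtime_minutes(baseline_minutes: float, speedup: float) -> float:
    """Runtime implied by a speedup factor relative to the baseline."""
    return baseline_minutes / speedup


def format_minutes(minutes: float) -> str:
    """Render minutes as 'Hh MMm', rounded to the nearest minute."""
    total = round(minutes)
    return f"{total // 60}h {total % 60:02d}m"


if __name__ == "__main__":
    for env, factor in speedups.items():
        runtime = implied_runtime_minutes(BASELINE_MINUTES, factor)
        print(f"{env:<22} ~{format_minutes(runtime)}")
```

Under these assumptions, the script prints roughly 1h 39m, 1h 06m, 3h 46m and 1h 45m for the four environments. The four test environments were configured at equal cost, so their relative speeds also rank their cost/performance against one another.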

The prospect was highly satisfied with the results and thrilled with the opportunity to implement a hybrid solution for this use case. They couldn’t believe that GPUs could accelerate their existing cloud-based architecture and significantly improve its overall cost/performance. Once we finished the POC, we took the same use case and ran it on different cloud services as a benchmark against other vendors.

So just remember: the next time you consider replacing or adding a component of your architecture, requesting a benchmark as part of the decision-making process is the natural thing to do. When a benchmark is presented to you, make sure you also read the fine print and ask the right questions to gauge the vendor’s objectivity. Whatever your final decision, I hope it rests not only on benchmark results but also on interoperability with your other tools, customer references, security capabilities, supported features, and flexibility for different business users.